Welcome to AIDA Defense

What Is AIDA?

AIDA isn’t coming—it’s already operational.

Adversarial AI is now capable of learning from encrypted data in transit. Every TLS handshake. Every "secured" connection. Every ciphertext packet. Today’s deployments of deterministic ciphers like AES-256 are teaching adversaries more than they’re protecting.

This shift has redefined the battlefield.

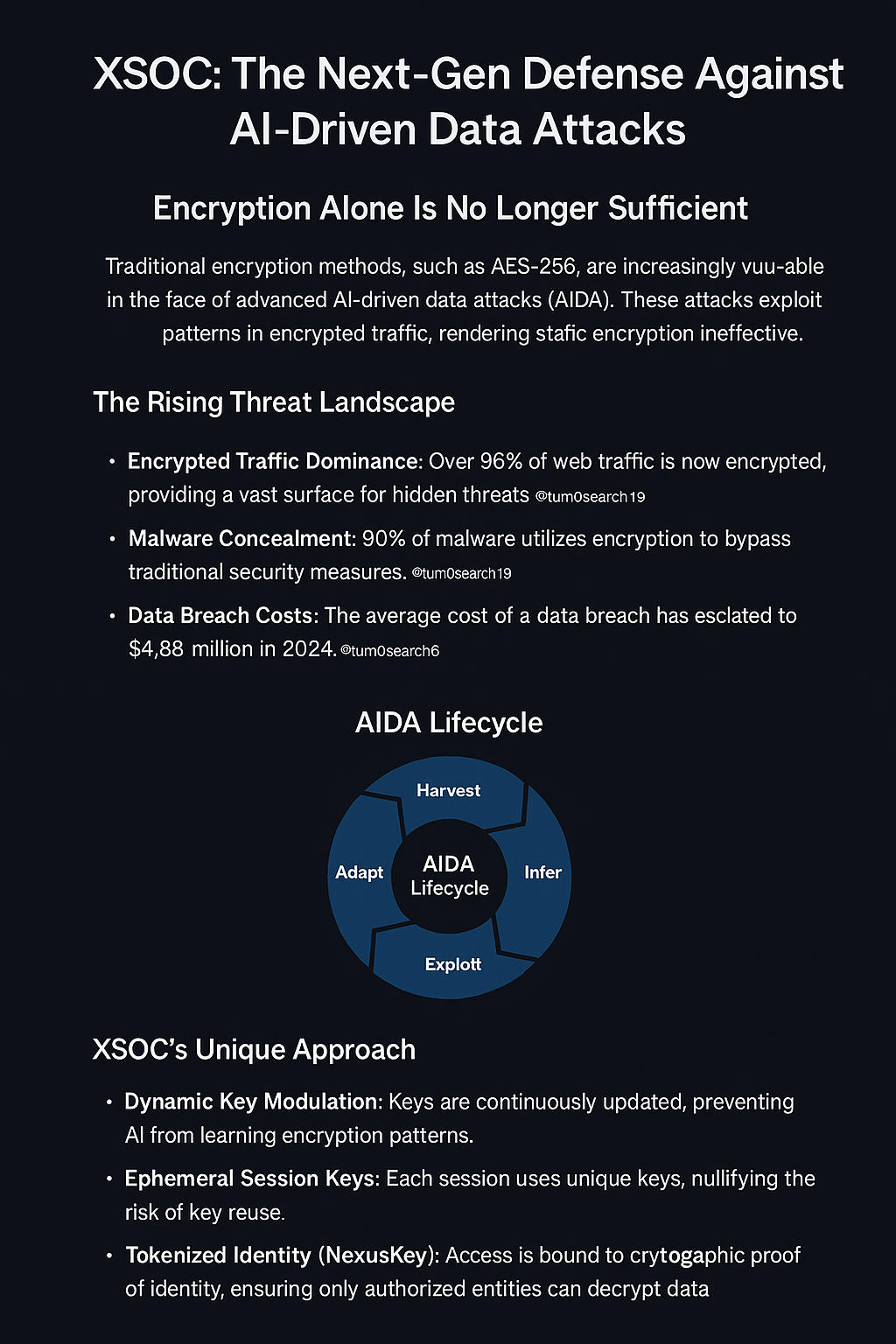

Pattern Inference Over Decryption: AI correlates encrypted metadata—packet size, timing, frequency—to reconstruct behavioral profiles and infer sensitive operations.

Recursive Learning: Models like DeepSeek iteratively improve by harvesting and refining inputs in real time, with no need to store or decrypt them.

Autonomous Exploitation: These systems detect weaknesses, generate tools, and deploy attacks at machine speed.
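To make the "packet size, timing, frequency" point concrete, here is a minimal Python sketch of the kind of metadata summary an observer could compute without touching any payload. The function name `flow_features`, the feature set, and the sample trace are all assumptions made for illustration; they do not describe any specific adversarial tool.

```python
from statistics import mean, pstdev


def flow_features(packets):
    """Summarize a flow given (timestamp_seconds, size_bytes) pairs.

    Only observable metadata is used; payload bytes are never read.
    """
    if len(packets) < 2:
        raise ValueError("need at least two packets")
    packets = sorted(packets)  # order by timestamp
    times = [t for t, _ in packets]
    sizes = [s for _, s in packets]
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    duration = times[-1] - times[0]
    return {
        "packet_count": len(packets),
        "mean_size": mean(sizes),
        "size_stdev": pstdev(sizes),
        "mean_gap": mean(gaps),
        "packets_per_second": len(packets) / duration if duration > 0 else float("inf"),
    }


# Hypothetical trace: four packets observed over 0.3 seconds.
trace = [(0.0, 120), (0.1, 1500), (0.2, 1500), (0.3, 80)]
features = flow_features(trace)
```

Every value in `features` comes from what is visible on the wire, which is why encryption of the payload alone does not hide it.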

AI Is Not Waiting for Quantum. Neither Should We.

The Age of AI-Driven Data Attacks Has Already Begun

The cybersecurity community has spent the last decade preparing for quantum decryption. But the real threat isn’t quantum—it’s inference. And it’s already operational.

Artificial Intelligence-driven Data Attacks (AIDA) have arrived ahead of schedule, reshaping the battlefield before most organizations even knew it had changed.

“Harvest Now, Infer Immediately.”

These AI models don’t decrypt—they deduce.

They don’t crack the key—they learn the behavior.

They don’t wait—they exploit encrypted systems now.

Real-Time Adversarial Exploitation Has Replaced Future Decryption

Analyzing encrypted traffic in transit. TLS- and SSL-protected traffic is rich in timing patterns, packet sizes, and session behaviors, all of which can reveal sensitive information even when the payload is encrypted.

Reconstructing data relationships. Recursive learning techniques can identify user behavior, location, transaction amounts, or communication structure—without needing the plaintext.

Feeding new inputs in real time. DeepSeek doesn't stockpile intercepted data—it trains on it live, adjusting its inference models as data flows through global fiber routes and compromised routing hardware.

Bypassing encryption completely. Adversarial AI uses metadata and signal analysis to map behaviors that were once assumed hidden behind cryptographic walls.
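As a toy illustration of inference without plaintext, the sketch below labels a flow from two metadata features using a nearest-centroid rule. The labels, the training numbers, and the choice of a log scale are invented for this example; a real system would presumably use far richer models, and nothing here reflects how any named model actually works.

```python
import math

# Hypothetical per-flow features: (mean packet size in bytes,
# mean inter-arrival gap in seconds). Invented values, not measurements.
TRAINING = {
    "video_stream": [(1400.0, 0.010), (1350.0, 0.012), (1420.0, 0.009)],
    "chat": [(180.0, 1.50), (220.0, 2.10), (160.0, 1.80)],
    "bulk_upload": [(1480.0, 0.002), (1490.0, 0.003), (1470.0, 0.002)],
}


def to_point(size, gap):
    """Map raw features to log scale so neither feature dominates the distance."""
    return (math.log10(size), math.log10(gap))


def centroid(points):
    """Average a list of 2-D points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


CENTROIDS = {
    label: centroid([to_point(size, gap) for size, gap in flows])
    for label, flows in TRAINING.items()
}


def classify(size, gap):
    """Return the label whose centroid is closest to the observed flow."""
    point = to_point(size, gap)
    return min(CENTROIDS, key=lambda label: math.dist(point, CENTROIDS[label]))


guess = classify(200.0, 1.7)  # an unseen flow with small, slow packets
```

The classifier never sees a key or a decrypted byte; it only compares the shape of the traffic against shapes it has seen before.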

AIDA ≠ Quantum Threats — It’s Faster, Smarter, and Already Operational

Machine-speed inference over brute-force decryption

Pattern modeling over key recovery

Metadata and ciphertext structure over private key theft

Real-time recursive adaptation over delayed post-processing

No waiting required. AIDA operates today. Timelines for quantum decryption are speculative.

No forensic trail. Inference leaves no cryptographic signature, unlike decryption logs or brute-force tools.

Dual-use efficiency. The same AI engines used for attacking Western encryption also enhance domestic surveillance infrastructure like China’s Social Credit System.

Why NIST PQC Algorithms Are Not AIDA-Resistant

Yes, NIST’s post-quantum algorithms, such as CRYSTALS-Kyber and CRYSTALS-Dilithium (standardized as ML-KEM and ML-DSA), are quantum-resistant.

But they are not AIDA-resistant.

These algorithms were designed to withstand quantum attacks based on Shor’s and Grover’s algorithms, not the recursive pattern modeling of adversarial AI systems trained on petabytes of encrypted traffic.

Kyber's reliance on module lattices introduces deterministic algebraic structures—ripe for AI modeling over time.

Even with keys drawn from a quantum random number generator (QRNG), the transformation processes in PQC are static.

AI doesn’t need the key—it just learns the rules.

Deep learning models enhanced by rStar-Math have shown the ability to extract key material through side-channel analysis of power, timing, and electromagnetic (EM) emissions, even on masked Kyber implementations.

PQC signatures exhibit behavior over time that recursive AI can predict. Without ACL binding (as in XSOC HyperKey), private keys can be approximated via inference modeling.

The Illusion of Safety in TLS, SSL, and Post-Quantum Adoption

Many organizations assume they're “safe” because they use TLS, SSL, or are migrating to NIST PQC.

But AIDA exploits the illusion of security—not its absence.

Encrypted doesn’t mean invisible.

TLS handshake patterns expose session types.

Certificate chains expose trust relationships.

Ciphertext length and repetition reveal transaction context.

All of this fuels recursive adversarial models like DeepSeek.
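The point about ciphertext length can be shown with simple arithmetic. The sketch below assumes an AEAD cipher that adds a fixed 16-byte tag and no length padding (as with AES-GCM used without record padding); the candidate messages and the helper names are hypothetical.

```python
TAG_BYTES = 16  # assumption: fixed-size AEAD tag, no length padding


def ciphertext_length(plaintext: bytes) -> int:
    """Length an observer would see for a given plaintext under the assumption above."""
    return len(plaintext) + TAG_BYTES


def consistent_candidates(observed_length, candidates):
    """Keep only the candidate plaintexts whose ciphertext would have this length."""
    return [c for c in candidates if ciphertext_length(c) == observed_length]


# Hypothetical set of requests an application is known to send.
CANDIDATES = [
    b'{"action":"balance"}',
    b'{"action":"transfer","amount":25000}',
    b'{"action":"logout"}',
]

# The observer sees only a length on the wire, never the bytes themselves.
observed = ciphertext_length(CANDIDATES[1])
matches = consistent_candidates(observed, CANDIDATES)
```

Because each candidate has a different length, the observed length alone narrows `matches` to a single request type, with no decryption involved.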

The data you assume is untouchable is the data most exposed to AI.

That’s where AIDA goes to work.

Only XSOC Neutralizes the AIDA Threat

XSOC doesn’t replace PQC.

It fortifies it with AI-resilient encryption that AIDA can’t study, learn from, or draw inferences from.

Dynamic Key Modulation: No fixed key states. No predictable encryption structure.

Ephemeral Session Keys: Zero reuse. No statistical modeling window.

Tokenized Identity (NexusKey): AI can’t simulate access without cryptographic proof of identity.

No lattice structures, no predictable S-Boxes, no static modes.

XSOC breaks the inference chain—at the root.

No static patterns. No modelable behavior. No training dataset to steal.
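For readers unfamiliar with the general idea behind ephemeral session keys, here is a generic Python sketch that derives a fresh key per session from a long-term secret using HKDF (RFC 5869) with SHA-256. It is not XSOC’s implementation, which this page does not describe; the names `hkdf_sha256` and `new_session_key`, the 32-byte sizes, and the `info` label are assumptions chosen for illustration.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF extract-then-expand (RFC 5869) using HMAC-SHA256."""
    if length > 255 * 32:
        raise ValueError("requested output is too long for HKDF-SHA256")
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def new_session_key(master_secret: bytes):
    """Derive a one-off key from a fresh random salt (hypothetical helper)."""
    session_salt = secrets.token_bytes(32)  # new randomness for every session
    key = hkdf_sha256(master_secret, session_salt, b"session-key")
    return session_salt, key


# Placeholder long-term secret for the demo only.
master = secrets.token_bytes(32)
salt_a, key_a = new_session_key(master)
salt_b, key_b = new_session_key(master)
```

Each call yields a different key, so no two sessions share key material; a peer holding the same master secret and the session salt can re-derive the key on its side.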